Hoping in iOS 18 that the Weather App radar actually shows rain. 🤞

App Groups, macOS and Extensions

This is one of those ‘note to self’ blog posts. I’ve been working on the next round of features for GlowWorm including a desktop widget. The GlowWidget, like every widget ever, needs to share some data with the main app. Widgets are all sandboxed and locked down, so we need to use an App Group to enable sharing between the extension and the main app. This is where it gets weird.

The internet is full of info on how to set up app groups. It starts by going to the developer portal, creating a group and adding it to the app identifier. Groups created in the dev portal are always prefixed with group. (trailing dot included). Once you’ve got that set up, you can add the App Group in the capabilities section of the Xcode project. Here’s the rub! For a Mac app, Xcode enforces that the app group begin with the developer’s Team ID which looks something like JQ49SEDXYZ. So… does it start with group. or does it start with JQ49SEDXYZ.?

The key info is summed up in this SO post and sort of in the docs. It turns out that Mac and iOS configs are completely different! The dev portal and the group. prefix are only for iOS. None of that needs to be done for Mac (like nothing at all in the dev portal). On the Mac, you just need to use the Team ID prefix and that’s it. The only setup is in the Capabilities section of Xcode, where you add an app group with your Team ID prefix, bundle ID and whatever suffix you’d like. For a bundle ID of com.example.app, you might make the app group JQ49SEDXYZ.com.example.app.shared.

Now that you’ve got an app group, make sure you’ve added that app group capability to all the relevant targets. For GlowWorm, it needs to be added to the main app as well as the widget. There are a couple of ways you can share data between the two targets now, but initially we’re only interested in sharing UserDefaults. To do that, you need to create an instance of UserDefaults explicitly associated with the app group. Using the example app group we’ve set up, you can create the shared instance with UserDefaults(suiteNamed: "JQ49SEDXYZ.com.example.app.shared").
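Here’s a minimal sketch of what that sharing could look like, assuming the example app group from above. The `brightness` key and the helper function names are made up for illustration and aren’t from GlowWorm. Note that `UserDefaults(suiteNamed:)` is a failable initializer, so the result needs unwrapping.

```swift
import Foundation

// Example app group identifier from this post (Team ID + bundle ID + suffix).
let appGroupID = "JQ49SEDXYZ.com.example.app.shared"

// Hypothetical key name, used only for illustration.
let brightnessKey = "brightness"

// UserDefaults(suiteNamed:) returns an optional, so callers unwrap it.
func sharedDefaults() -> UserDefaults? {
    UserDefaults(suiteNamed: appGroupID)
}

// Main app side: write a value into the shared suite.
func saveBrightness(_ value: Double) {
    sharedDefaults()?.set(value, forKey: brightnessKey)
}

// Widget side: read the value back. double(forKey:) yields 0 when the key is missing.
func loadBrightness() -> Double {
    sharedDefaults()?.double(forKey: brightnessKey) ?? 0
}
```

Both targets would need the same suite name, so keeping it in one shared constant avoids a typo in one place silently pointing the widget at a different set of defaults.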

And with that, we’re in business! Both the main app and the extension can access the data in the shared user defaults. Now we just have to make it look pretty. 🙈

Back in 2007, some weird stuff happened and I ended up with a VIP badge for WWDC so I got to see Steve do a keynote up close!

Ugh! Burned again! Looks like the HomeKit API is only available on macOS for Catalyst apps! AFAIK, there aren’t even any hoops to jump through to embed Catalyst bits inside an AppKit app. AppKit inside Catalyst works, but not this way round. Harrumph.

I haven’t used the new Netflix app yet, but this auto-expanding tile thing has been terrible in every other app that has tried it.

Netflix just revealed a major redesign for its TV app - 9to5Mac:

The new look replaces the static tiles containing the shows and movies you want to watch with boxes that extend as soon as your remote lands on them.

My CI build failed today because it couldn’t find two files. After 10 minutes thinking I botched a git merge, I discover that no, git is fine. Xcode decided to create those files in my Downloads folder instead of the project directory. Thanks for that Xcode. You’re the best!

2010 11” MacBook Air vs 2024 13” MacBook Air

I was thinking about netbooks recently as well as a portable device for developers and I was fondly recalling my beloved 11” MacBook Air I had about 10 years ago. That thing was fantastic. The display was short but wide, so it was excellent for meetings since you could easily look over the top of the screen. Battery life was good (for the time) and I never felt like I needed more ports. In my rose-colored recollection, the 11” was tiny, but I wondered how that actually compared to Apple’s smallest notebook today.

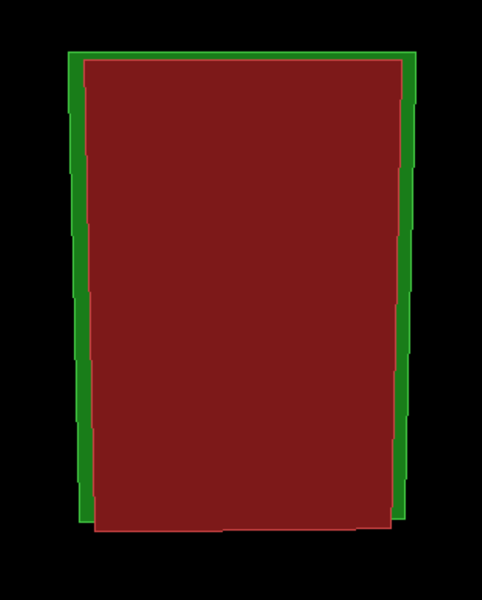

Here’s the overlay with the 13” M series MBA in green and the vaunted 11” in red. There’s not that much difference! The 13” is ~0.8 inches deeper and only about 0.2 inches wider. The 11” had the classic Air wedge shape and was actually about 50% thicker than the 13” at the butt end.

Looking at the 11”, you can see how much space it had to cede to bezels, which have all but disappeared in today’s MBA. The display has gone high res too. The screen resolution has essentially doubled in both directions going from just over one megapixel of screen real estate to well over four megapixels.
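For the curious, here’s the pixel math as a quick sketch. The resolutions are an assumption on my part, taken from the published specs rather than measured: 1366 × 768 for the 11” and 2560 × 1664 for the 13” M series Air.

```swift
// Assumed native resolutions from published specs (not measured).
struct Display {
    let width: Int
    let height: Int
    var pixels: Int { width * height }
}

let air11 = Display(width: 1366, height: 768)
let air13 = Display(width: 2560, height: 1664)

// Scale factor in each direction, plus the overall pixel count ratio.
let widthRatio = Double(air13.width) / Double(air11.width)
let heightRatio = Double(air13.height) / Double(air11.height)
let pixelRatio = Double(air13.pixels) / Double(air11.pixels)

print(air11.pixels, air13.pixels)          // 1049088 4259840
print(widthRatio, heightRatio, pixelRatio) // roughly 1.87, 2.17 and 4.06
```

So it’s a bit under double in width and a bit over double in height, which nets out to about four times the pixels.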

So Apple’s smallest laptop is still pretty small! The 13” moniker fooled me into thinking it was bigger, but those disappearing bezels have let the physical dimensions of the machine shrink down to just a tad bigger than the display. I bet I can even still see over the screen in a meeting.

The best iPad for a developer? The MacBook Air.

When the iPad was released back in 2010, there was a sort of implied hierarchy of iPhone → iPad → Mac. As you stepped from one to the next, the device became less portable, with a bigger screen and more power. And the price went up. In the middle spot, the iPad was clearly better suited than the iPhone for “work”, but didn’t measure up to the Mac for capability. I’m a Swift developer and an old Linux geek, so the iPad has never fit into my life in a significant way. It won’t run Xcode. Other solutions to write code usually involve a remote server somewhere. The terminals are crippled by security restrictions. 14 years after release, these limitations of the iPad haven’t really changed.

What has changed is the iPad is now as powerful as a Mac and just as expensive. The base model MacBook Air has an M2 processor and 256GB of storage, available for around $1000. Compare that with a mid-range iPad Air, the new M2 model, with 256GB of storage. Add a Magic Keyboard and that clocks in right at $1000. Exactly the same price! That old hierarchy has fallen apart. The iPad is no longer cheaper and also no longer less powerful than a Mac. (At least in terms of hardware. Software is another story.) But for me, as a developer, the iPad still doesn’t do the things I need, so if I want a small(-ish) portable device that I can use for email, web and do a little work on, I’m going to go for the MacBook Air, no question. The craziest part is that Walmart and Best Buy are selling the older MacBook Air for ~$700! Not the top of the line, but it’ll have no problem with email, web and streaming. And it can run Xcode and a real terminal, all with great battery life. Turns out, in 2024, the best iPad for a developer is a MacBook Air.

Whatever happened to subnotebooks? Those were the tiny laptops you could find in the 90s and early 2000s. The form factor was generally a ‘just big enough to be usable’ keyboard and whatever sized screen matched the footprint. I had a Toshiba Libretto which was about the size of a VHS tape that I carried around in a Discman case. There were others like the Sony PictureBook and the Gateway Handbook. I guess the market was eaten by netbooks that turned into Chromebooks which are now basically just the cheapest thing you can build with a 12” screen and a keyboard. Ah, the adventure of booting Linux from an external floppy drive. Those were the days!

Less branching → Better code

Interesting confirmation of a gut feeling I’ve had for a while. With UIKit on iOS, I see a lot of developers write code that updates bits of the user interface as inputs change. As the code grows, the execution path becomes a convoluted flow chart of branches and conditional updates. Secondary dependencies often get lost, giving rise to bugs that can take hours to track down. And then you’re not really sure that you didn’t break something else with the “fix”.

But you can ditch all this complexity by just updating the whole interface every time. When the view opens, update the interface. When the user checks the box, update the interface. When the network query returns data, update the interface. Now you’ve got a simple linear method that does all the work which is both less likely to have logical errors and easier to reason about if any errors do occur. Through no coincidence, this is also how SwiftUI works.
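Here’s a rough sketch of the idea in plain Swift so it runs without UIKit. All the names (`ScreenState`, `Interface`, `ScreenController`) are hypothetical stand-ins, with `Interface` playing the role of the real labels and buttons.

```swift
// Hypothetical screen state: every input the interface depends on lives here.
struct ScreenState {
    var isLoading = false
    var isSubscribed = false
    var items: [String] = []
}

// Hypothetical stand-in for the real views (labels, spinners, buttons).
struct Interface: Equatable {
    var title = ""
    var showsSpinner = false
    var submitEnabled = false
}

final class ScreenController {
    private(set) var interface = Interface()

    // Any change to any input triggers a full refresh.
    var state = ScreenState() {
        didSet { updateInterface() }
    }

    // "When the view opens, update the interface."
    init() { updateInterface() }

    // One linear pass that sets every piece of the interface from the current state.
    private func updateInterface() {
        interface.title = state.items.isEmpty ? "No items" : "\(state.items.count) items"
        interface.showsSpinner = state.isLoading
        interface.submitEnabled = state.isSubscribed && !state.isLoading
    }
}
```

Checking the box, getting the network response and opening the view all end up in the same `updateInterface()` call, so there’s only one place to look when something on screen is wrong.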

I’ve written more tests than just about… | justin․searls․co:

... the single most important thing programmers can do to improve their code is to minimize branching (e.g. if statements)...

I’m shopping for an office desk and thought I’d check staples.com. Open up the app and it demands I download their new app. Okay whatever. New app is literally just a web view for staples.com, complete with the app banner to open the app. That I’m already in. LOL.

Last week’s Google: how to add git submodule

This week’s Google: how to remove git submodule

And the circle of life continues…

I’m working on breaking components out of my Swift project into local SPM packages. I’m really starting to like the architecture. But I’m having trouble with testing. I’d like to ⌘-T in Xcode and run both the app unit tests and the SPM tests, but I can’t make it work. All the posts online redirect back to this answer: developer.apple.com/forums/th… But when I try to follow along, the SPM test targets just aren’t in the list. Anyone have a clue?

In general, I’m skeptical about AI code generation, especially in the hands of inexperienced engineers who think it’s smarter than they are. But when you need it to do a menial thing? Fantastic! For a Swift project, I needed a bunch of constants that I found on GitHub in a Python file. I pointed ChatGPT at the URL and asked it to generate the corresponding Swift enum, but change the keys from snake case to camel case. And… it just did it! I think it might even be right.
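If I wanted to spot-check the key names instead of trusting it, a small helper along these lines would do. This is just a sketch of the snake case to camel case rule, not what ChatGPT generated, and `camelCased` is a name I made up.

```swift
// Convert a snake_case identifier (as found in the Python file) to camelCase.
// Upper-case constants such as MAX_RETRY_COUNT are lowercased first.
func camelCased(_ snake: String) -> String {
    let parts = snake.split(separator: "_").map { $0.lowercased() }
    guard let first = parts.first else { return "" }
    // Capitalize the first letter of every word after the first.
    let rest = parts.dropFirst().map { word in
        word.prefix(1).uppercased() + String(word.dropFirst())
    }
    return ([first] + rest).joined()
}

print(camelCased("max_retry_count"))  // maxRetryCount
print(camelCased("MAX_RETRY_COUNT"))  // maxRetryCount
```

Running each Python key through this and comparing against the generated enum cases would catch any renaming slip-ups.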

TIL - the term ‘split horizon’ DNS. It’s a thing I’ve been doing for 20+ years to manage internal vs external DNS. I always called it ‘DNS jiggery pokery’, but I guess this is better.

I’m 5+ years late to this party, but I finally started playing Breath of the Wild back in the fall. Videos always made the combat seem complicated, but it really isn’t. The complexity of the world is amazing. I watched a cut scene where Zelda stood up and dusted the dirt off her pants. My brain is still trying to reconcile that level of detail with the 8-bit blob at the end of the NES edition.

I’ve loved this game so much I hate to actually finish it. But it’s such a big thing, I don’t want to start Tears of the Kingdom right away. But I’ve still got a couple of shrines to find. And I’ve never been in battle on a horse.

Made a lot of progress yesterday with reverse engineering the messaging protocol for a USB powered light. Turns out Wireshark is not just for network snooping!

So if you’re wearing a Vision Pro and you see someone else wearing a Vision Pro, you just see the headset, right? Somebody at Apple is furiously working on recognizing other headsets and replacing them with Personas.

Our first Mac was a purple gumdrop G3 iMac DV with Mac OS 9. We were in grad school at the time and my wife used it to write her thesis in Word. The iMac was a fantastic little machine, but Word would occasionally center her entire document so there was cursing and crying. I don’t think we ever had anything fancy enough to plug into those FireWire ports. #MyFirstMac

It’s the kind of day where you have to disable System Integrity Protection. I’m sure this’ll all turn out fine.

Somebody nicked our credit card number and Bank of America sent new cards. Cool. Then I have to manually update Apple Wallet on like 6 different devices… not as cool.